Fabrice AI is a digital representation of my thoughts based on all the content of my blog. It is meant to be an interactive, intelligent assistant capable of understanding and responding to complex queries with nuance and accuracy.

Fabrice AI began as an experiment, a personal quest to explore the potential of artificial intelligence by creating a digital version of the extensive knowledge I had shared over the years. Initially, I envisioned this as a straightforward project, something that could be completed in a matter of hours. The plan was simple: upload my content into OpenAI’s API and allow the AI to interact with it, thereby creating an accessible, intelligent assistant that could provide nuanced answers based on the wealth of information I had shared.

However, as I embarked on this journey, it quickly became apparent that the task was far more complex than I had anticipated. The project, which I thought would be a brief foray into AI, rapidly expanded into a comprehensive and intricate endeavor, requiring far more than just a superficial upload of data. This turned into a deep dive into the intricacies of AI, natural language processing, and knowledge management.

The core challenge I faced was not just about storing information, it was about teaching the AI to understand, contextualize, and accurately retrieve that information in a way that reflected the depth and nuance of my original content. This required a multi-faceted approach, as I discovered that simple methods of data storage and retrieval were insufficient for the complexity of the questions I wanted Fabrice AI to handle.

The journey took me through a wide range of approaches, from the initial attempts at using vector search indices to more advanced methods involving knowledge graphs, metadata retrieval, and custom-built AI models. Each approach had its own set of strengths and weaknesses, and each taught me something new about the complexities of AI and the nuances of digital knowledge management. I will describe in detail the technical path taken in the next blog post.

Beyond the technical issues faced, generating an exhaustive knowledge base proved challenging as well. In the early phases of testing the accuracy of the AI, it dawned on me that the most detailed and accurate answers to some questions were those I gave in video interviews or podcasts. To be accurate, I needed the knowledge base to include all my posts, video interviews, podcasts, PowerPoint presentations, images and PDF documents.

I started by transcribing all the content. Given that the automatic transcriptions are approximate to begin with, I had to make sure the AI understood the content. This took a long time as I had to test the answers for each piece of transcribed content.

Even though the transcriptions separated me from the other speaker, the AI first thought 100% of the spoken content was mine which required a lot of further training to make sure it could differentiate both speakers correctly on all the content. I also wanted Fabrice AI to give more weight to recent content. Of course, the first time I tried that it used the date at which I uploaded the content to the LLM rather than the date I originally posted the article, which required further adjustments.

For the sake of exhaustiveness, I also transcribed the knowledge in slides I shared on the blog by using the OCR model in Azure for image to text conversion then uploaded the files to the GPT assistant knowledge base. Likewise, I downloaded PDFs from WordPress’ media library and uploaded them to the knowledge base.

During beta testing, I noticed that many of my friends asked personal questions that were not covered on the blog. I am waiting to see the types of questions that people ask over the next few weeks. I will complete the answers in case they cannot be found with the existing content on my blog. Note that I am intentionally limiting the answers of Fabrice AI to the content on the blog, so you truly get Fabrice AI and not a mixture of Fabrice AI and Chat GPT.

It’s worth mentioning that I took a long-winded path to get here. I started by using GPT3 but was disappointed by the results. It kept using the wrong sources to answer the questions even though some blog posts had exactly the answer the question posed. Despite tens of hours working on the issue trying to get it to use the right content (which I will cover in the next blog post), I never got results I was satisfied with.

Things improved with GPT3.5 but were still disappointing. I then built a GPT application in the GPT Store using GPT Builder. It worked a bit better and was cheaper to operate. However, I could not get it to run on my website, and it was only available to paid subscribers of Chat GPT which I felt was too limiting. Regardless, I did not love the quality of the answers and was not comfortable releasing it to the public.

The breakthrough came with the release of GPT Assistants using model 4o. Without me needing to tell it which content to use, it just started figuring it out on its own and everything just worked better. I ditched the GPT application approach and went back to using the API so I could embed it on the blog. For the sake of exhaustiveness, I also tested Gemini, but preferred the answers given by GPT4o.

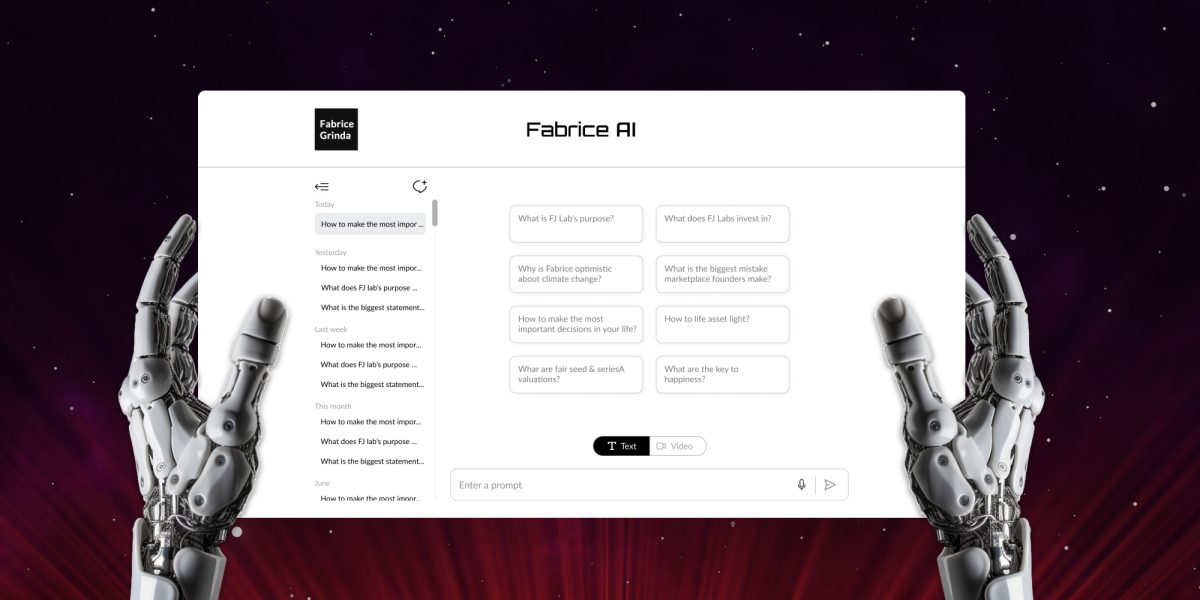

I am releasing a text only version for now. It includes a voice-to-text feature so you can ask your questions by voice. I am toying with a few ways to code an interactive version that looks and sounds like me that you can have a conversation with. I have a working prototype but am far from happy with the results and the potential cost. I want to make sure it speaks in first person, really looks and sounds like me, and does not cost an arm and a leg for me to operate.

We shall see how much progress I make in the coming months, but it might just make sense to wait for GPT5. In hindsight, I would have saved hundreds of hours of work if I had just waited for GPT4o to develop Fabrice AI. Then again, the investigation was part of the point, and it was super interesting.

In the meantime, please play with Fabrice AI and let me know what you think!